Why LLMs Will Never Be Creative

LLMs are bad at writing original jokes, they output “AI slop”, and LLM agents frequently get stuck. If you are a programmer who has used AI, you have almost certainly experienced this lack of creative problem-solving. That is because humor, interesting writing, and good programming solutions all require creativity. I use the term creativity as the quantum physicist and philosopher David Deutsch defines it: the ability to create new knowledge through conjecture and criticism.

He explains how conjecture and criticism are analogous to variation and selection in evolution, which makes the growth of knowledge an evolutionary process. Creativity is the most powerful piece of software in the entire universe, as it allows us to create knowledge, solve problems, and transform entire solar systems.[1]

Try asking proponents of “AGI soon”: would you still have to prompt it? LLMs, lacking agency, disobedience, and creativity, cannot rival or threaten humans, so fearing them is irrational. While LLMs can be misused to persuade, defraud, hack, or design bioweapons, they also enable protection. For instance, an LLM might suggest a DDoS attack, but human creativity, augmented by LLMs, can devise novel defenses such as adaptive firewalls.

Claiming that LLMs will somehow gain creativity is prophecy. A prediction draws conclusions about future events from good explanations. A prophecy purports to know what is not yet knowable, such as the invention of a creative program. Moore’s law is a prediction based on an explanation of how transistor density scales (advances in photolithography, innovations in materials science, and trends in power efficiency). The claim that “more AI researchers working on improving LLMs will lead to creativity” is not a good explanation. AI scaling laws predict performance (e.g., loss) from model size, data, and compute, not creativity. Test-time compute scaling improves reasoning during inference, but it still lacks creative conjecture.
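For concreteness, here is one widely cited form of such a scaling law: the parametric fit from DeepMind’s Chinchilla study (Hoffmann et al., 2022). It models pre-training loss $L$ as a function of the number of parameters $N$ and the number of training tokens $D$, where $E$, $A$, $B$, $\alpha$, and $\beta$ are constants fitted to experimental training runs (their values depend on the setup):

$$
L(N, D) = E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}}
$$

Every input is a quantity of model size or data, and the output is a loss value. Nothing in the equation refers to conjecture or creativity.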

AlphaEvolve, a recent Google advancement, optimizes algorithms such as matrix multiplication within a defined search space[2] using clear evaluation metrics. It cannot independently conjecture new problems or solutions as humans do.

Expecting to create a creative program without first understanding in detail how creativity works is like expecting skyscrapers to learn to fly if we build them tall enough. Savery and Newcomen, the inventors of the steam engine, relied on experimentation and mechanical intuition, but they still had a rudimentary, intuitive knowledge of thermodynamics. Similarly, the Wright brothers understood aerodynamics before inventing the airplane.

Machine learning fundamentally works by applying inductive probability to the occurrence of tokens in a training corpus.[3] But induction can’t create new knowledge.[4] For centuries Europeans thought all swans were white. In 1697, Dutch explorers discovered black swans in Australia. Inducing that all swans are white didn’t create new knowledge. Similarly, LLMs recently failed to generate a picture of a wine glass filled to the brim. The LLM fundamentally lacks an understanding of these concepts and can’t conjecture what a full-to-the-brim wine glass would look like without having seen hundreds of labelled examples. In the illustration below, the full-to-the-brim wine glass and the reversed traffic lights are black swans in the LLM’s training data.
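To make the induction point concrete, here is a deliberately simplified sketch in Python: a count-based bigram model, which is far cruder than the neural networks behind real LLMs. It only illustrates the idea of estimating next-token probabilities from frequencies observed in a corpus. The function names and the tiny “pre-1697” corpus are hypothetical, chosen for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each token follows each other token (a toy, count-based model)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_token_probs(counts, prev):
    """Relative frequencies of tokens observed after `prev`; unseen continuations get nothing."""
    following = counts[prev]
    total = sum(following.values())
    return {tok: n / total for tok, n in following.items()} if total else {}


# Hypothetical pre-1697 "corpus": every swan anyone has observed is white.
corpus = [
    "the swan is white",
    "a swan is white",
    "every swan we saw is white",
]

model = train_bigram(corpus)
print(next_token_probs(model, "is"))                     # {'white': 1.0}
print(next_token_probs(model, "is").get("black", 0.0))   # 0.0
```

Because every training sentence pairs “is” with “white”, the model gives “black” a probability of zero: frequencies alone can only echo what was already observed.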

This video walks through a great example prompt involving watches:

The prompt is:

Draw a group of watches, showing 3 minutes after 12.

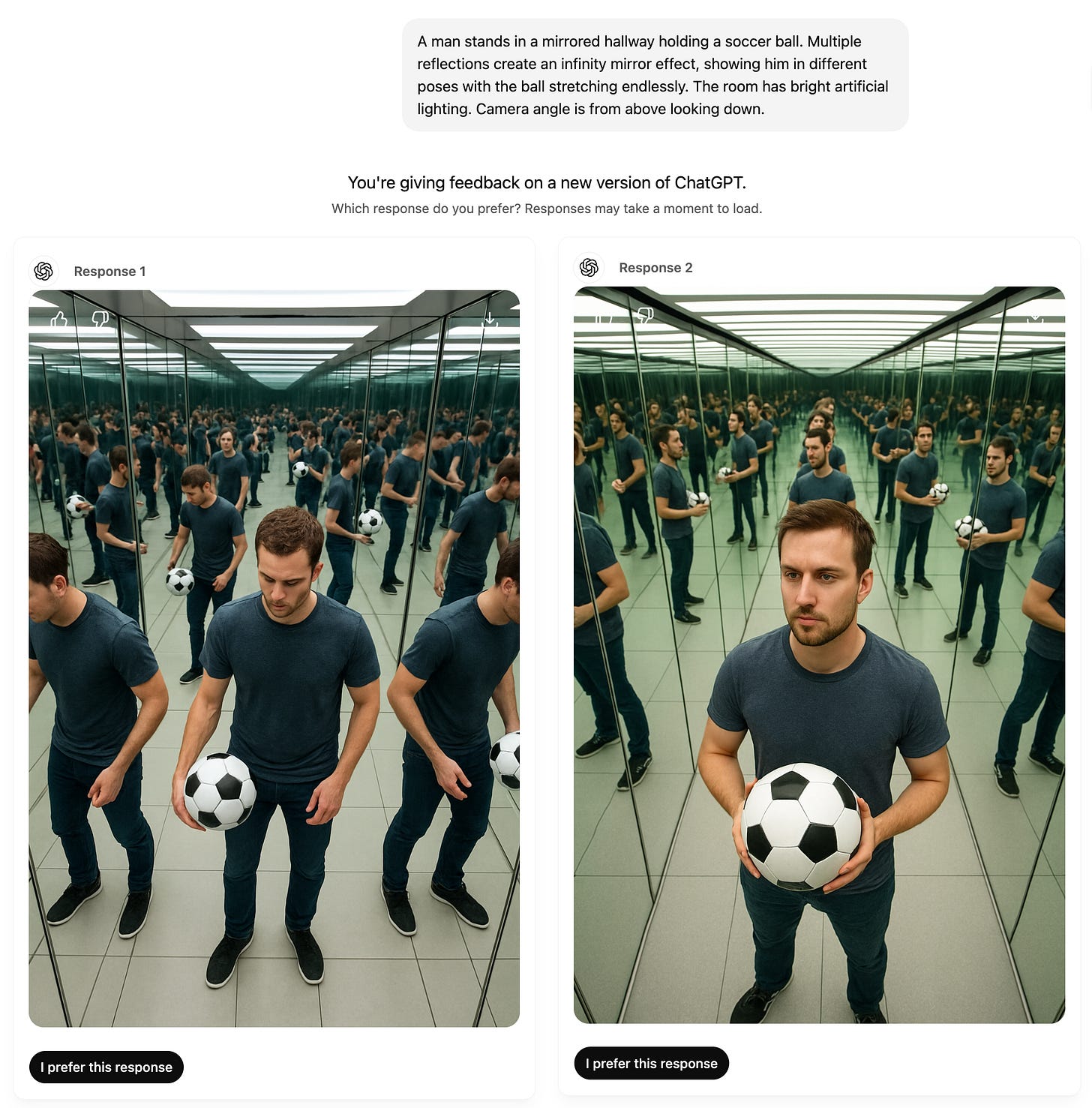

I also came up with this “double mirrored hallway” example. The prompt is:

A man stands in a mirrored hallway holding a soccer ball. Multiple reflections create an infinity mirror effect, showing him in different poses with the ball stretching endlessly. The room has bright artificial lighting. Camera angle is from above looking down.

“LLM intelligence” is different from human intelligence. As Peter Thiel said:

Computers are tools, not rivals. [...] As computers become more and more powerful, they won’t be substitutes for humans: they’ll be complements.

For tasks that require creativity, augmenting humans with LLMs is therefore more effective than replacing them with LLMs outright. This is why Cursor (an AI IDE) is overall a more successful product than Devin (an AI coding agent). If you have ever used Cursor’s agent mode on difficult problems, such as debugging, without intervening or guiding it, you will have noticed that it tends to get stuck. This is because it cannot creatively conjecture solutions.[5]

Thanks to Tom Walczak, James Bergust, Vaden Masrani, Logan Chipkin, Simon Berens, Laser Nite, and others for giving feedback on drafts of this.

Knowledge of how to make fire solves the problem of freezing. Knowledge of how to control genetics will solve the problem of cancer. Knowledge of how to engineer nuclear-powered spacecraft and to mine asteroids and planets allows us to transform solar systems.

Inspired by machine learning researcher Kenneth Stanley, I have written about open-ended search processes on my blog before.

David Deutsch, following Popper, critiques induction (generalizing from observations) as unable to create new knowledge. Knowledge grows through creative conjecture and criticism, not through patterns in data. Induction assumes the future mirrors the past, but theories like Newton’s arise from imaginative guesses that are then rigorously tested, not from repeated observations.

I elaborate on this point here:

Why All Job Displacement Predictions are Wrong: Explanations of Cognitive Automation

Most predictions about job displacement through large language models (LLMs) are wrong. This is because they don’t have good explanations of how LLMs and human intelligence differ from each other and how they interact with our evolving economy.